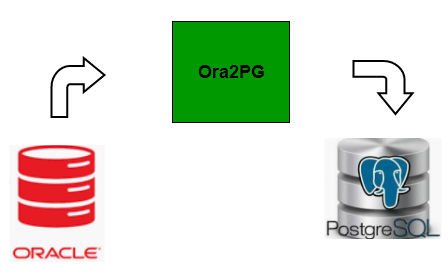

Oracle to PostgreSQL database migration using ora2pg

This is part of a series of articles I am going to write about my experiences of oracle to PostgreSQL migration. Here I will cover the ora2pg tool used for data migration.

These are the sequence of high level steps to be followed where each of them will be covered in detail on upcoming articles.

1. Generate PG schema

2. Prepare PG database server

3. Data migration

4. Data validation

5. PG backup and log archiving

Lets deep dive into the step number 3 data migration with ora2pg.

Ora2pg is a open source free tool but yet it is very reliable and actively supported by the developer.

Generally ora2pg can be installed in either oracle or pg sever. But, It is good to have a separate server for the ora2pg tool for large databases so that you don’t have to mess up with installing additional tools in your production database servers. And you can simply delete ora2pg server/vm after the migration without installing unwanted ora2og binaries on PostgreSQL server which we are going to use one time during initial migration.

There will be 3 separate servers(or vms) for Oracle, PostgreSQL and ora2pg as illustrated in the diagram.

These are the key settings you should pay attention while performing the data migration.

- Connection settings. Add the oracle server name, username and password accordingly

ORACLE_DSN dbi:Oracle:host=<hostname>;sid=<SID>;port=1521

ORACLE_USER <username>

ORACLE_PWD <password>- You already have the schema created by now, so disable the schema creation entry

EXPORT_SCHEMA 0- Set the schema name of your oracle server

SCHEMA <schema name>- Disable the tablespace creation. It is recommended to create tablespace upfront as I covered in the other article

USE_TABLESPACE 0- Ensure partitioning is enabled during the data migration. Specially, this is important for larger databases. Ora2pg has limitation of 4 billion rows per table. If you have a table with more than 4 billion rows, its most likely a partitioned table. If you disable the partitioning directive, ora2pg will consider the large partition table as a single entity and it will transfer as a whole. If you enable partitioning, each partition and sub-partitions are transferred as different tables. So that you can avoid 4 billion limitation on top of performance improvement

DISABLE_PARTITION 0- PG connection settings

PG_DSN dbi:Pg:dbname=<dbname>;host=<pg hostname>;port=5432

PG_USER <username>

PG_PWD <password>- Select where the log files of migration should be stored

OUTPUT ora2pg.log

OUTPUT_DIR /var/logs/- You can choose to enable stop on error so that data migration will cancel if it encounter any errors during the migration. Of course you will have to perform the migration again for that failed table by dropping it from PG side. For the larger tables, its recommended to start separate ora2pg processes individually, so that you will only have to restart the failed table instead of all the tables.

STOP_ON_ERROR 1- If you choose to continue on error, enable this parameter. It will log all the insert transactions in a file, so that you can process them separately.

LOG_ON_ERROR 1- Select the PG version you are migrating to. Needless to say, use the latest or one before latest stable version at the time you plan for migration

PG_VERSION 11Lets talk about the pg indexes. You can either create pg indexes before or after the migration. But I recommend to create indexes after completing the data migration specially for large databases with terabyte of data. Simply because data transfer is faster if PG doesn’t have to update the indexes during the data inserts. Let’s assume data migration takes 24 hours and index creation takes another 24 hours. If you create indexes upfront, data migration with indexes will complete approximately in 2 days. But if the migration fails on the latter part, you will have to start from scratch and lose 2 days instead of 1 day.

Here are some tips to make sure underline hardware infrastructure in place for the migration.

Ensure reliable network connectivity with reasonable bandwidth available between oracle, ora2pg and pg servers. All 3 of them on same network or same geographical location is highly advised. If the db size is in terabytes, better to have 10Gbps network between them, but minimum 1Gbps is recommended.

Its always difficult upsize cpu specs of oracle due to license constraints but ensure ora2pg and pg servers have adequate cpu and memory resources.

Here are the key directives you should carefully assign to get the maximum data transfer speed. I will explain here a scenario, you may have to modify them based on your system requirements. I am not going to explain the purpose of each of these directives, please refer the official documentation for detailed explanation.

- -J and -L decides the speed of data extraction from oracle. If you have multiple tables to transfer same time, choose -L instead of -J.

- Theoretically, -j x -L(-J) should equal or less than to your ora2pg server’s core count. Which means, if you have 24 cores in ora2pg server, -j = 6 and -J =4 are the suggested values.

- But as per my experience, you can try with -j 20 and -L 10 if you have 24 cores in ora2pg server. You may have to run some tests and find the optimal values for your environment. I have seen close to 400, 000 rows per second transfer speed but it really depends on underline hardware and network performance.

- Then -L 50,000 is a better value for above mentioned -J and -j values. If you don’t see any memory bottleneck in ora2pg server, you can try increasing this value.

Above mentioned parameters can be configured in ora2pg.conf file, but suggest to use those as parameters with ora2pg command so that you can modify them easily for different databases or environments. Before you start the migration, ensure no other users or apps connected to the oracle database and insert/modify data during migration. Its best to mount oracle database in read only mode before start the migration.

Here is how you can initiate the ora2pg data migration.

ora2pg -t COPY -J 10 -j 20 -L 50000 -c ora2pg.conf -a "<Table Names>"Of course, you should run it in background and redirect the output to a file. Or you may write a bash wrapper script.

nohup ora2pg -t COPY -J 10 -j 20 -L 50000 -c ora2pg.conf -a "<Table Names>" > /log/file 2>&1 &You can see the migration progress from the /log/file log file which will gives the transfer speed and percentage of data migration. Its important to note that this percentage calculated based on the total row counts in oracle table verses no of rows migrated. However, total row count of oracle table is taken from the oracle statistics so this might not be very accurate. This is why you may notice that data migration will not complete at 100% instead less or more than that.

If you see any issues, find out the root cause, apply the fixes and rerun the migration again. You have to drop the table from pg before rerun it. If migration completed without any errors, you are good to calculate the row count of tables on oracle and PG side and do the comparison. This all for this article, I will cover more on data validation in next one. Thanks for reading.